Last edited by onederfa on 2024-6-17 15:31

PVE 8.2 + i7-9850H + three 4 TB SSDs with the MAP1602 controller (ZhiTai (致钛) 7100 ×1 + Acer (宏碁) GM7 ×2)

The kernel is 6.8.4.

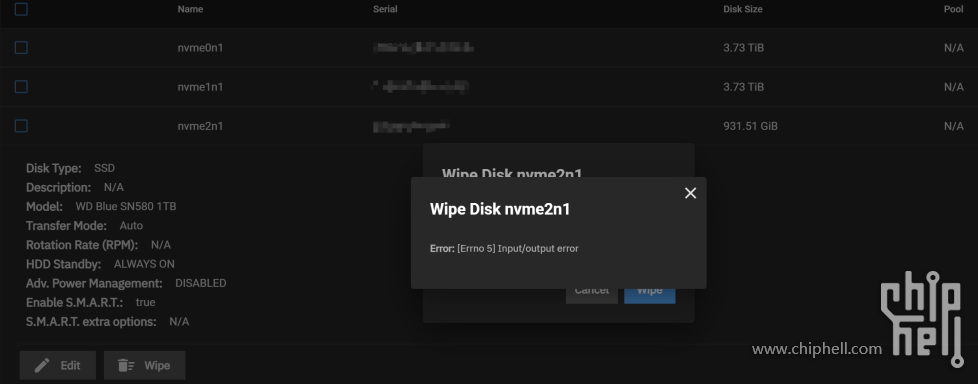

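- Problem: PVE recognizes and can operate all three drives, and NVMe passthrough to the TrueNAS SCALE VM succeeds, with the drives visible inside the VM. However, when creating a storage pool, wiping the drive in one particular M.2 slot fails with I/O error 5 (each of the three drives fails in turn when moved into that slot), while the other two slots work normally.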

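- A faulty M.2 slot. But the PVE host recognizes and runs all three drives normally, and with Windows installed as the host OS they are also recognized and work. Ruled out.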

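- A problem with the TrueNAS VM. But when the drive is passed through to a Windows VM instead, the drive in this same slot is again the only one that does not work. Ruled out.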

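- A MAP1602 controller quirk. Swapping in a 1 TB SN580 did not help, and neither did switching to the PVE kernel patched for the Maxio (联芸) MAP1602 described in an article by an expert on this forum.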

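So the most likely culprit is PVE itself or the NVMe passthrough. But why do the other two slots work, while this one passes through fine yet is unusable inside the VM? It is baffling. I have gone back and forth by process of elimination and still have no idea where the problem lies. Could someone who knows this area take a look? 🙏🙏🙏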

The log contains:

- Jun 17 15:20:13 pve kernel: vfio-pci 0000:70:00.0: Unable to change power state from D3cold to D0, device inaccessible
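To see which passed-through devices hit this power-state failure and how often, the kernel log can be filtered for the message. The sketch below is only an illustration: the function name `find_power_state_failures` and the regular expression are assumptions written to match the single log line quoted in this post, not an official log format.

```python
import re

# Pattern modelled on the one vfio-pci message quoted in the post (an assumption,
# other kernels may word the message differently).
POWER_STATE_RE = re.compile(
    r"vfio-pci (?P<addr>[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]): "
    r"Unable to change power state from (?P<src>D\w+) to (?P<dst>D\w+), "
    r"device inaccessible"
)


def find_power_state_failures(log_lines):
    """Return (pci_address, from_state, to_state) for every matching log line."""
    hits = []
    for line in log_lines:
        match = POWER_STATE_RE.search(line)
        if match:
            hits.append((match.group("addr"), match.group("src"), match.group("dst")))
    return hits


sample = [
    "Jun 17 15:20:13 pve kernel: vfio-pci 0000:70:00.0: "
    "Unable to change power state from D3cold to D0, device inaccessible",
]
print(find_power_state_failures(sample))
```

Feeding it the output of the host's kernel log (for example, lines saved from `dmesg`) would show whether only 0000:70:00.0 is affected or whether other devices report the same transition failure.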

I tried disabling ASPM in the BIOS and adding pcie_aspm=off nvme_core.default_ps_max_latency_us=0 to GRUB. Neither helped.

- GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_aspm=off nvme_core.default_ps_max_latency_us=0"
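Parameters set in GRUB only matter if they reach the running kernel, so it can be worth comparing them against the kernel command line after a reboot. The following is a minimal sketch under that assumption; `missing_kernel_params`, `read_running_cmdline`, and `EXPECTED` are hypothetical names, and the expected list is simply copied from the GRUB line in this post.

```python
# Parameters taken from the GRUB_CMDLINE_LINUX_DEFAULT line in the post.
EXPECTED = [
    "intel_iommu=on",
    "iommu=pt",
    "pcie_aspm=off",
    "nvme_core.default_ps_max_latency_us=0",
]


def missing_kernel_params(cmdline, expected):
    """Return the expected parameters that do not appear in the command line."""
    present = set(cmdline.split())
    return [param for param in expected if param not in present]


def read_running_cmdline(path="/proc/cmdline"):
    """Read the command line of the currently running kernel (Linux only)."""
    with open(path) as handle:
        return handle.read().strip()


# Demonstration on the string from the post rather than on the live system.
configured = (
    "quiet intel_iommu=on iommu=pt pcie_aspm=off "
    "nvme_core.default_ps_max_latency_us=0"
)
print(missing_kernel_params(configured, EXPECTED))
```

On the host itself, `missing_kernel_params(read_running_cmdline(), EXPECTED)` returning a non-empty list would suggest the GRUB change was not applied, for instance because the boot configuration was not regenerated or the host boots through a different loader.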

A fio test on the failing drive inside the TrueNAS VM shows I/O errors:

- read: (g=0): rw=read, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- write: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- randwrite: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- fio-3.33

- Starting 4 processes

- fio: io_u error on file /dev/nvme2n1: Input/output error: write offset=700571648, buflen=4096

- fio: pid=98259, err=5/file:io_u.c:1876, func=io_u error, error=Input/output error

- fio: io_u error on file /dev/nvme2n1: Input/output error: write offset=0, buflen=4096

- fio: pid=98257, err=5/file:io_u.c:1876, func=io_u error, error=Input/output error

- Jobs: 2 (f=2): [R(1),X(1),r(1),X(1)][100.0%][r=216MiB/s][r=55.3k IOPS][eta 00m:00s]

- read: (groupid=0, jobs=4): err= 5 (file:io_u.c:1876, func=io_u error, error=Input/output error): pid=98256: Sun Jun 16 23:44:54 2024

- read: IOPS=30.7k, BW=120MiB/s (126MB/s)(7202MiB/60010msec)

- slat (nsec): min=1065, max=146684, avg=2187.12, stdev=1327.62

- clat (nsec): min=452, max=72715k, avg=62330.69, stdev=1329730.42

- lat (usec): min=11, max=72717, avg=64.52, stdev=1329.75

- clat percentiles (usec):

- | 1.00th=[ 13], 5.00th=[ 14], 10.00th=[ 14], 20.00th=[ 15],

- | 30.00th=[ 15], 40.00th=[ 16], 50.00th=[ 16], 60.00th=[ 17],

- | 70.00th=[ 18], 80.00th=[ 19], 90.00th=[ 27], 95.00th=[ 30],

- | 99.00th=[ 86], 99.50th=[ 98], 99.90th=[30278], 99.95th=[43779],

- | 99.99th=[43779]

- bw ( KiB/s): min=40644, max=229472, per=99.70%, avg=122532.77, stdev=55151.28, samples=238

- iops : min=10161, max=57368, avg=30633.02, stdev=13787.84, samples=238

- lat (nsec) : 500=0.01%, 750=0.01%, 1000=0.01%

- lat (usec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=82.67%, 50=14.97%

- lat (usec) : 100=1.90%, 250=0.32%, 500=0.01%, 750=0.01%, 1000=0.01%

- lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%, 50=0.11%

- lat (msec) : 100=0.01%

- cpu : usr=2.84%, sys=6.81%, ctx=1843437, majf=6, minf=69

- IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

- submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

- complete : 0=0.1%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

- issued rwts: total=1843826,2,0,0 short=0,0,0,0 dropped=0,0,0,0

- latency : target=0, window=0, percentile=100.00%, depth=1

- Run status group 0 (all jobs):

- READ: bw=120MiB/s (126MB/s), 120MiB/s-120MiB/s (126MB/s-126MB/s), io=7202MiB (7552MB), run=60010-60010msec

- Disk stats (read/write):

- nvme2n1: ios=1838134/2, merge=0/0, ticks=109134/525, in_queue=109660, util=100.00%

- read: (g=0): rw=read, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- ...

- write: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- ...

- randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- ...

- randwrite: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

- ...

- fio-3.33

- Starting 16 processes

- Jobs: 16 (f=16): [R(4),W(4),r(4),w(4)][100.0%][r=275MiB/s,w=1606MiB/s][r=70.5k,w=411k IOPS][eta 00m:00s]

- read: (groupid=0, jobs=16): err= 0: pid=2128: Mon Jun 17 00:14:10 2024

- read: IOPS=71.6k, BW=280MiB/s (293MB/s)(16.4GiB/60001msec)

- slat (nsec): min=1053, max=38148, avg=2475.01, stdev=1204.03

- clat (nsec): min=732, max=17269k, avg=108465.12, stdev=189486.51

- lat (usec): min=13, max=17271, avg=110.94, stdev=189.49

- clat percentiles (usec):

- | 1.00th=[ 43], 5.00th=[ 45], 10.00th=[ 46], 20.00th=[ 48],

- | 30.00th=[ 53], 40.00th=[ 62], 50.00th=[ 78], 60.00th=[ 92],

- | 70.00th=[ 104], 80.00th=[ 129], 90.00th=[ 167], 95.00th=[ 202],

- | 99.00th=[ 676], 99.50th=[ 1778], 99.90th=[ 2114], 99.95th=[ 2311],

- | 99.99th=[ 4178]

- bw ( KiB/s): min=223096, max=324488, per=100.00%, avg=286252.77, stdev=1723.79, samples=952

- iops : min=55774, max=81122, avg=71563.19, stdev=430.95, samples=952

- write: IOPS=418k, BW=1634MiB/s (1713MB/s)(95.7GiB/60001msec); 0 zone resets

- slat (nsec): min=973, max=81383, avg=2096.82, stdev=873.04

- clat (nsec): min=492, max=22555k, avg=16296.29, stdev=70921.50

- lat (usec): min=8, max=22557, avg=18.39, stdev=70.93

- clat percentiles (usec):

- | 1.00th=[ 10], 5.00th=[ 11], 10.00th=[ 11], 20.00th=[ 12],

- | 30.00th=[ 12], 40.00th=[ 13], 50.00th=[ 13], 60.00th=[ 14],

- | 70.00th=[ 14], 80.00th=[ 15], 90.00th=[ 16], 95.00th=[ 17],

- | 99.00th=[ 37], 99.50th=[ 43], 99.90th=[ 1565], 99.95th=[ 1795],

- | 99.99th=[ 2089]

- bw ( MiB/s): min= 1461, max= 1684, per=100.00%, avg=1635.05, stdev= 6.45, samples=952

- iops : min=374034, max=431212, avg=418572.69, stdev=1652.47, samples=952

- lat (nsec) : 500=0.01%, 750=0.01%, 1000=0.01%

- lat (usec) : 2=0.01%, 4=0.01%, 10=2.41%, 20=81.08%, 50=5.24%

- lat (usec) : 100=6.29%, 250=4.37%, 500=0.27%, 750=0.08%, 1000=0.02%

- lat (msec) : 2=0.20%, 4=0.04%, 10=0.01%, 20=0.01%, 50=0.01%

- cpu : usr=6.82%, sys=12.45%, ctx=29393451, majf=0, minf=216

- IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

- submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

- complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

- issued rwts: total=4293850,25100258,0,0 short=0,0,0,0 dropped=0,0,0,0

- latency : target=0, window=0, percentile=100.00%, depth=1

- Run status group 0 (all jobs):

- READ: bw=280MiB/s (293MB/s), 280MiB/s-280MiB/s (293MB/s-293MB/s), io=16.4GiB (17.6GB), run=60001-60001msec

- WRITE: bw=1634MiB/s (1713MB/s), 1634MiB/s-1634MiB/s (1713MB/s-1713MB/s), io=95.7GiB (103GB), run=60001-60001msec

- Disk stats (read/write):

- nvme2n1: ios=4285430/25063631, merge=0/0, ticks=449166/338480, in_queue=787646, util=99.91%
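In the failing run above, both `io_u error` lines are writes, and `issued rwts: total=1843826,2,0,0` shows only two writes were issued while reads kept completing, which suggests the slot fails on writes rather than on all I/O. A small parser makes that pattern easier to check across longer logs. This is a sketch only: `parse_io_u_errors` is a hypothetical helper and the regular expression is an assumption based on the two error lines in this post.

```python
import re

# Pattern modelled on the fio error lines quoted in the post (an assumption).
IO_U_ERROR_RE = re.compile(
    r"fio: io_u error on file (?P<file>\S+): (?P<msg>.+?): "
    r"(?P<op>read|write) offset=(?P<offset>\d+), buflen=(?P<buflen>\d+)"
)


def parse_io_u_errors(lines):
    """Extract file, operation, offset and buffer length from fio error lines."""
    errors = []
    for line in lines:
        match = IO_U_ERROR_RE.search(line)
        if match:
            errors.append(
                {
                    "file": match.group("file"),
                    "op": match.group("op"),
                    "offset": int(match.group("offset")),
                    "buflen": int(match.group("buflen")),
                }
            )
    return errors


fio_lines = [
    "- fio: io_u error on file /dev/nvme2n1: Input/output error: write offset=700571648, buflen=4096",
    "- fio: io_u error on file /dev/nvme2n1: Input/output error: write offset=0, buflen=4096",
]
errors = parse_io_u_errors(fio_lines)
print({e["op"] for e in errors}, [e["offset"] for e in errors])
```

The two failing offsets (0 and 700571648) are far apart, so the errors do not point at one bad region of the drive.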

TrueNAS error:

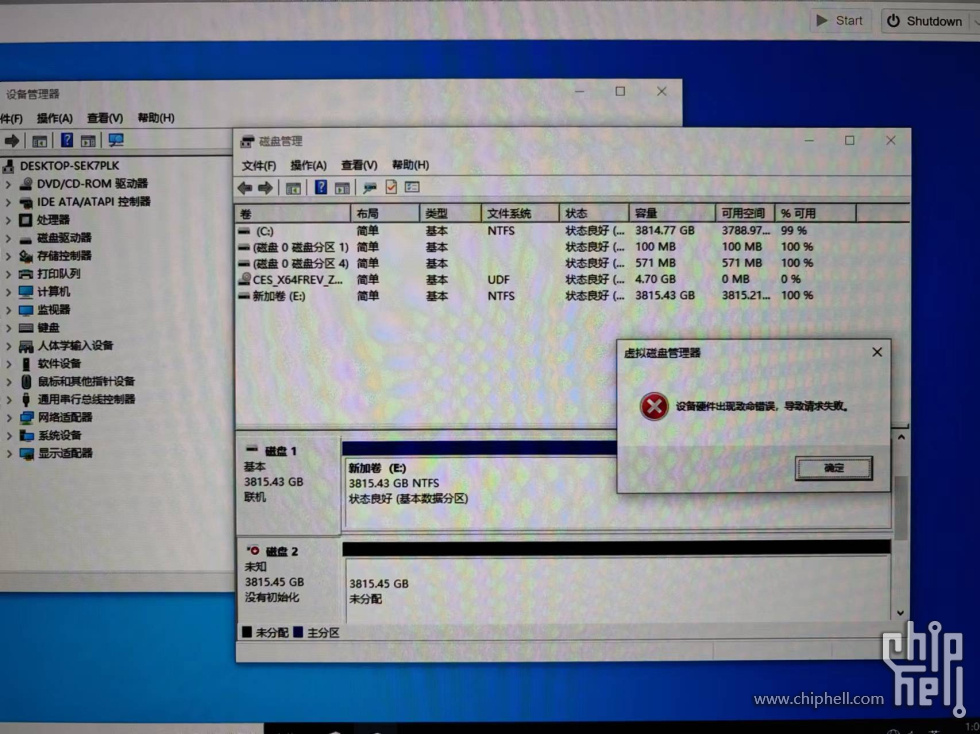

Formatting error in the Windows 10 VM

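There are three NVMe drives. The two NVMe slots on the compute card are connected to the south bridge, and the one farther from the CPU cannot be used.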

The slot that reports errors is the one farther from the CPU.
